Another year, another collection of photos

As usual, made with a few tweaks of this gist. The featured image was made with a similar script. I’ve got a tag for these things now, flickr year; I need to find a few posts. I’ve been doing these since 2014, time flies!

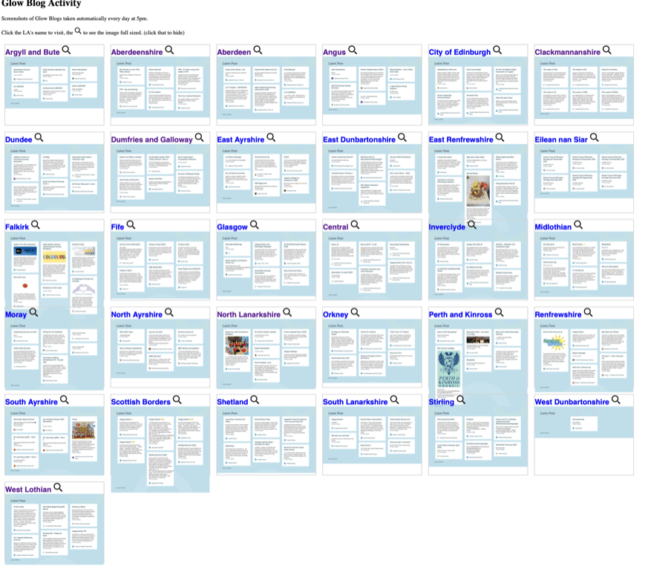

One of the things I am interested in as part of my work on Glow Blogs is what people are using Glow Blogs for.

Glow Blogs is made up of 33 different WordPress multisites: one for each Local Authority in Scotland and one central one.

The home page of each LA lists the last few posts, so visiting these pages gives you an idea of what is going on. In the past I’ve opened up each LA in a tab in my browser and gone through them; I had a script that would open them all up. I’ve now worked out an easy way to get a quick overview.

Recently I noticed shot-scraper, “Tools for taking automated screenshots of websites”. I’ve used various automatic webpage screenshot services in the past, but they have usually either charged money or shut down. I used webkit2png a wee bit but ran into now-forgotten problems, perhaps around https?

shot-scraper can be automated and extended. It is a command line tool, and installing these is always an interesting struggle. I usually just follow the instructions blindly, searching for any problems as I go. In this case it didn’t take too long.

Once installed, shot-scraper is pretty easy to use. Running shot-scraper https://johnjohnston.info dumps an image called johnjohnston-info.png.

There are a lot of options: you can output JPEGs rather than PNGs, run some JavaScript before taking a screenshot, or wait for a while. You can even choose a section of the page to grab.

So I can use shot-scraper to create screenshots of each LA homepage. Then display them on a web page for a quick overview of Glow Blogs.

```bash
#!/bin/bash

# Work in the folder that holds the screenshots; stop if it is missing
cd /Users/john/Documents/scripts/glowscrape/img || exit 1

URLLIST="ab as ac an ce cl dd dg ea ed el er es fa fi gc glowblogs hi in mc my na nl or pk re sa sb sh sl st wd wl"

for i in $URLLIST; do
  # Hide the cookie banner, then grab only #glow-latest-posts as an 80% quality JPEG
  /usr/local/bin/shot-scraper -s "#glow-latest-posts" -j "jQuery('.pea_cook_wrapper').hide()" \
    --quality 80 "https://blogs.glowscotland.org.uk/$i" -o "$i.jpg" && continue
done
```

This first hides the cookie banner displayed by blogs and then screenshots the #glow-latest-posts section of the page only.

The script continues by copying the images over to my Raspberry Pi, where they are shown on a web page.
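The post doesn’t show the web page itself. A minimal sketch of one way to build it, assuming the screenshots are named after the LA codes (ab.jpg, wl.jpg and so on) and sit in the same folder as the page; the function name make_overview_page is hypothetical:

```shell
#!/bin/bash
# Hypothetical sketch: write an index.html that shows every LA screenshot in a folder.
# Assumes screenshots are named <la-code>.jpg, as the loop in the script produces.
make_overview_page() {
  local dir=${1:-.}                 # folder holding the .jpg screenshots
  local out="$dir/index.html"
  local img name
  {
    echo '<!DOCTYPE html>'
    echo '<html><head><meta charset="utf-8"><title>Glow Blogs overview</title></head><body>'
    for img in "$dir"/*.jpg; do
      [ -e "$img" ] || continue     # no screenshots: the pattern stays unexpanded
      name=$(basename "$img" .jpg)
      # One captioned thumbnail per LA; the src is relative to index.html
      printf '<figure><img src="%s.jpg" alt="%s" width="400"><figcaption>%s</figcaption></figure>\n' \
        "$name" "$name" "$name"
    done
    echo '</body></html>'
  } > "$out"
}
```

Running make_overview_page /Users/john/Documents/scripts/glowscrape/img after the screenshots are taken leaves an index.html that can be copied to the Pi along with the images.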

I hit a couple of problems along the way. The first was that the script stopped running when it could not find the #glow-latest-posts section. This happens on a couple of LAs that have no public blogs. Adding && continue to the screenshot command fixed that.
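If it is useful to know which LAs were skipped, here is a hedged alternative sketch that checks each screenshot’s exit status explicitly, records the failures and keeps going. The names scrape_all and shoot_la are hypothetical; shoot_la just wraps the same shot-scraper call as the script:

```shell
#!/bin/bash
# Hypothetical sketch: run a screenshot command for each LA code, note which
# ones fail (e.g. no #glow-latest-posts on the page) and carry on regardless.
scrape_all() {
  local cmd=$1; shift               # command to run, called as: $cmd <la-code>
  local code
  local -a failed=()
  for code in "$@"; do
    if ! "$cmd" "$code"; then
      failed+=("$code")
    fi
  done
  # Report the skipped LAs on stderr so they are easy to spot in a log
  if [ "${#failed[@]}" -gt 0 ]; then
    echo "skipped: ${failed[*]}" >&2
  fi
  return 0
}

# Wrapper around the shot-scraper call used in the script
shoot_la() {
  shot-scraper -s "#glow-latest-posts" \
    -j "jQuery('.pea_cook_wrapper').hide()" \
    --quality 80 "https://blogs.glowscotland.org.uk/$1" -o "$1.jpg"
}
```

Usage would be scrape_all shoot_la ab as ac an ..., with the same list of LA codes as URLLIST.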

The second problem came when I wanted to run the script regularly. OS X schedules tasks with launchd, and I’ve used Lingon X to schedule a few of these. Since I had recently updated my system, I first needed to get a new version of Lingon X. I then found that increased security gave me a few hoops to jump through to get the script to run.
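Lingon X is a front end for launchd, whose per-user jobs are property-list files, typically in ~/Library/LaunchAgents. For anyone without Lingon X, a hedged sketch that writes such a file by hand, running a script once a day at a set time. The function name, label and script path in the usage note are hypothetical, and this does nothing about the macOS security prompts mentioned above:

```shell
#!/bin/bash
# Hypothetical sketch: write a LaunchAgent plist that runs a script daily at
# hour:minute, using the standard launchd keys Label, ProgramArguments and
# StartCalendarInterval.
write_launch_agent() {
  local label=$1 script=$2 hour=$3 minute=$4 out=$5
  cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <key>Label</key>
  <string>${label}</string>
  <key>ProgramArguments</key>
  <array>
    <string>/bin/bash</string>
    <string>${script}</string>
  </array>
  <key>StartCalendarInterval</key>
  <dict>
    <key>Hour</key>
    <integer>${hour}</integer>
    <key>Minute</key>
    <integer>${minute}</integer>
  </dict>
</dict>
</plist>
EOF
}
```

For example, write_launch_agent local.glowscrape "$HOME/Documents/scripts/glowscrape/glowscrape.sh" 7 30 "$HOME/Library/LaunchAgents/local.glowscrape.plist", then check it with plutil -lint and load it with launchctl load.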

I think it would have been simpler to do the whole job on a Raspberry Pi, but I was not sure if it would run shot-scraper. I’ll leave that for another day and a newer Pi.

This is a pretty trivial use of a very powerful tool. I’ve now got a webpage that gives me a quick overview of what is going on in Glow Blogs, and I’ve taken another baby step in bash.

The first thing that surprised me was the lack of featured images on the blog posts. These not only make the LA home pages look nicer, they also make blog posts displayed on twitter more attractive.

Since 2014 I’ve been making “movies” with my flickr photos for the year. I make them with a script which downloads the year’s photos, puts them together into a movie and used to add music. The music bit is broken (https), so I downloaded some manually.

This year pretty much stopped in October, then I got covid in November and have not been out much since.

I also average the photos (below) and montage them for the featured image. This year I made a version of the script to download wee square images for the montage (average & montage scripts here).

I enjoy both the process and watching my photos flickr by. I like the fact that I can easily tweak bits of the script or quickly run the video creation again to try out different speeds, music etc.

Hi Aaron,

This is a useful guide. I remember Oliver Quinlan, a guest on Radio EDUtalk talking about the eloquence of the command line compared to pointing and grunting.

I enjoy using the command line, often with Raspberry Pis, but it is easy to miss some of the basics, which this guide covers well.

Sometimes working on forward plans involves a good deal of tedious reorganising of text. Time to google some geekiness. (I know a spreadsheet would do this, but where is the fun in that?)

awk -F '\t' '{ print $2 "\t" $1 }' splits each line at tabs and prints the first two columns in reverse order.
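The one-liner only prints columns 2 and 1, so any further columns on a line are dropped. A small sketch that reverses however many tab-separated columns each line has; the function name reverse_columns is just for illustration:

```shell
#!/bin/bash
# Reverse the order of every tab-separated column on each line of input.
# Blank lines are passed through unchanged.
reverse_columns() {
  awk -F '\t' -v OFS='\t' '{
    if (NF == 0) { print ""; next }
    for (i = NF; i > 0; i--)
      printf "%s%s", $i, (i > 1 ? OFS : ORS)
  }' "$@"
}
```

It reads files named as arguments or standard input, e.g. reverse_columns plan.tsv > reversed.tsv.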

After seeing @adders on micro.blog posting some timelapses, I thought I might have another go. My first thought was just to use the feature built into my phone. I then thought to repurpose a Raspberry Pi. This led to the discovery that two of my Pis were at school, leaving only one at home with a camera. This wee Zero had done sterling service, taking over 1 million pictures of the sky, stitching them into 122918 gifs and posting them to tumblr. I decommissioned it when tumblr started mistaking these for unsuitable photos.

My first idea was just to write a simple bash script that would take a picture and copy it to my Mac. I’ve done that before; I’d just need to timestamp the image names. Then I found RPi-Cam-Web-Interface. This is really cool: it turns your Pi into a camera and a web server where you can control the camera and download the photos.
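For comparison, a minimal sketch of that simple-script idea, assuming the legacy raspistill camera tool (newer Raspberry Pi OS releases replace it with libcamera-based tools) and key-based scp to the Mac. The function names, the sky prefix and the destination are all hypothetical:

```shell
#!/bin/bash
# Hypothetical sketch: name each picture with a timestamp so the files sort
# in the order they were taken, e.g. sky-20200218-071500.jpg
timestamped_name() {
  echo "${1:-sky}-$(date +%Y%m%d-%H%M%S).jpg"
}

# Take one picture and copy it to another machine.
capture_and_copy() {
  local dest=$1                     # e.g. john@my-mac.local:timelapse/ (hypothetical)
  local file
  file=$(timestamped_name)
  raspistill -o "$file" && scp "$file" "$dest"
}
```

Run from cron every few minutes, this builds up a folder of images whose names already sort in time order for stitching.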

I am fairly used to setting up a headless pi and getting on my WiFi now. So the next step was just to follow all the instructions from the RPi-Cam-Web-Interface page. The usual fairly incomprehensible stuff in the terminal ensued. All worked fine though.

I then downloaded the folder full of images onto my mac and stitched them together with ffmpeg.

ffmpeg is a really complex beast; I think this worked OK.

Make a list of the files with:

for f in *.jpg; do echo "file '$f'"; done > mylist.txt

Then stitch them together:

ffmpeg -r 10 -f concat -i mylist.txt -c:v libx264 -pix_fmt yuv420p out.mp4
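Assuming each image ends up as one frame at the -r rate, the movie’s running time is roughly the number of images divided by the frame rate, which is handy when picking a value for -r. A hypothetical helper to check that before encoding:

```shell
#!/bin/bash
# Hypothetical helper: estimate the running time of the finished movie,
# assuming each image becomes one frame at the given frame rate.
estimate_seconds() {
  local fps=${1:-10} dir=${2:-.}
  local count=0 f
  for f in "$dir"/*.jpg; do
    [ -e "$f" ] && count=$((count + 1))   # ignore the literal pattern if no matches
  done
  awk -v n="$count" -v r="$fps" \
    'BEGIN { printf "%d images at %s fps = %.1f seconds\n", n, r, n / r }'
}
```

Where the ffmpeg build supports glob patterns, ffmpeg -framerate 10 -pattern_type glob -i '*.jpg' -c:v libx264 -pix_fmt yuv420p out.mp4 should also work without the list file.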

I messed about quite a bit. Resizing the images before starting made for a smaller movie, and finally I ran

ffmpeg -i out.mp4 -vf scale=720:-2 outscaled.mp4

to make an even smaller version.

I am now on the look out for more interesting weather or a good sunset.

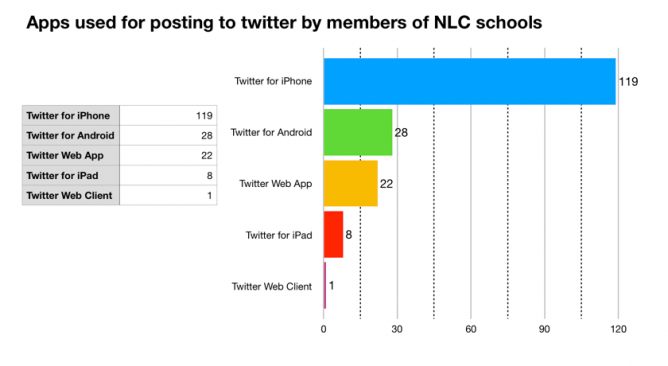

I’ve talked to a fair number of teachers who find it easier to use twitter than to blog to share their classroom learning. I’ve been thinking a little about how to make that easier but got sidetracked wondering how schools, teachers and classes use twitter.

If you use twitter on the web, it tells you the application used to post each tweet: at the bottom of a tweet there is the date and the app that posted it.

I’ve got a list of North Lanarkshire schools that I started when I was supporting ICT in the authority.

I could go down the list and count the methods, but I thought there might be a better way. I recalled having played with the twitter API a wee bit, so I searched for and found the Twitter Developers page for GET lists/statuses. I was hoping there was some sort of console to use but could not find one. A wee bit more searching found how to authenticate to the API using a token and how to generate that token: Using bearer tokens.

It then didn’t take too long to work out how to pull in a pile of status updates from the list using the terminal:

curl --location --request GET 'https://api.twitter.com/1.1/lists/statuses.json?list_id=229235515&count=200&max_id=1225829860699930600' --header 'Authorization: Bearer BearerTokenGoesHere'

This gave me a pile of tweets in json format. I had a vague recollection that google sheets could parse json, so I gave that a go. I had to upload the json somewhere I could import it into a sheet, which felt somewhat clunky. I did see some indications that I could use a script to grab the json in sheets, but thought it might be simpler to do it all on my Mac. More searching, but I fairly quickly came up with this:

curl --location --request GET 'https://api.twitter.com/1.1/lists/statuses.json?list_id=229235515&count=200&' --header 'Authorization: Bearer BearerTokenGoesHere' | jq '.[].source' | sed -e 's/<[^>]*>//g' | sort -bnr | uniq -c | sort -bnr
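A hedged variation: saving the JSON first (curl’s -o option) means the counting can be re-run without hitting the API again. Here the counting half of the pipeline is wrapped in a hypothetical function that expects one “source” HTML snippet per line, e.g. from jq -r '.[].source' tweets.json (the -r drops the quotes):

```shell
#!/bin/bash
# Count how many tweets came from each app, most used first.
# Input: one tweet "source" HTML snippet per line.
count_sources() {
  sed -e 's/<[^>]*>//g' |   # strip the <a href=...> wrapper, leaving the app name
    sort |                  # bring identical app names together
    uniq -c |               # count each run of identical lines
    sort -rn                # highest count first
}
```

Usage would be jq -r '.[].source' tweets.json | count_sources, where tweets.json is whatever file the curl output was saved to.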

This gives the following counts:

| Tweets | Posted with |
|---:|---|
| 119 | Twitter for iPhone |
| 28 | Twitter for Android |
| 22 | Twitter Web App |
| 8 | Twitter for iPad |
| 1 | Twitter Web Client |

This surprised me. I use my school iPad to post to twitter and sort of expected iPads to be highest, or at least higher.

It may be that the results are skewed by the Monday and Tuesday holiday and 2 in-service days, so I’ll run this a few times next week and see. You can also use a max_id parameter, so I could gather more than 200 tweets (less retweeted content).
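Paging with max_id means asking for tweets older than the oldest one already fetched. Twitter’s max_id is inclusive, so the next request uses the oldest ID minus one. A hypothetical helper, assuming the IDs are taken from the id_str field, since tools that read JSON numbers as floating point can round 19-digit tweet IDs:

```shell
#!/bin/bash
# Hypothetical paging helper: given tweet IDs (one per line), print the
# max_id for the next request, one less than the oldest (smallest) ID.
next_max_id() {
  local oldest
  oldest=$(sort -n | head -n 1)
  [ -n "$oldest" ] || return 1      # no tweets: nothing older to fetch
  echo $(( oldest - 1 ))            # bash arithmetic is 64-bit, enough for tweet IDs
}
```

Usage would be something like max=$(jq -r '.[].id_str' page1.json | next_max_id), then repeating the curl request with &max_id=$max added to the URL.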

This does give me the idea that it might be worth explaining how to make posting to Glow Blogs simpler using a phone.

Update, Friday: back to school, and NLC looks like this:

| Tweets | Posted with |
|---:|---|
| 74 | Twitter for iPhone |
| 51 | Twitter for iPad |
| 18 | Twitter for Android |
| 10 | Twitter Web App |
| 1 | dlvr.it |

A nice WordPress video from Micro.blog’s @ross. Just the right depth and length for me.

I liked the Pummelvision service, so when it went I sort of made my own, which led to this:

Flickr 2014 and DIY pummelvision and 2016 Flickring by.

I went a little early this year:

I’ve updated the script (gist) to handle a couple of new problems.

I have great fun with this every time I try it. I quite like the results, but tinkering with the script is the fun bit. I’m sure it could be made a lot more elegant, but it works for me.

These are some notes on getting some of Dave Winer’s web tools that use Node running on a Raspberry Pi.

I’d originally posted these on the Pi, but the SD card was corrupted and I’d no backup (I’ve had that lesson a few times).

These notes are not likely to be of interest to many and are somewhat abrupt.