I just found this post in my drafts, last edited in September 2023. The bug mentioned is still there, and I think the creativity shown by my class is worth remembering.

For the past couple of weeks, we have been working on a micro:bit project in class. One of the interesting aspects of working with a diverse group is the need to introduce new ideas and topics to the class, especially when some students already have experience in the area. This year, my class is Primary 5, 6, and 7. The Primary 7s have already had some exposure to micro:bit and other block coding environments.

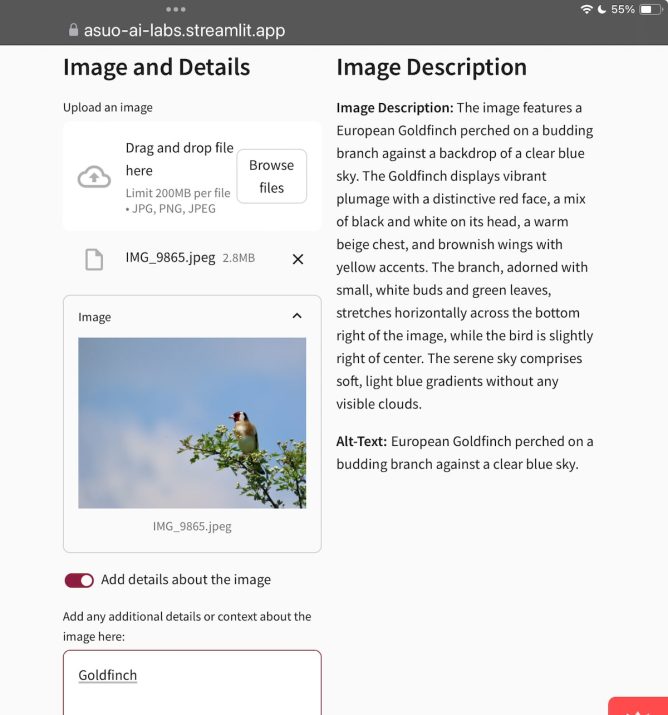

I decided to focus on the virtual pets project from the MakeCode site for our first project. In my classroom, we use the micro:bit app on our iPads for coding, and both the app and the micro:bits have had a couple of useful improvements since the last session. The ability to download data to the iPad has been introduced; we haven’t explored it yet, but I hope to do so soon. Another improvement is a simpler method for resetting the micro:bit when connecting via Bluetooth, making it more accessible for smaller fingers.

I introduced the topic by discussing Tamagotchi pets, which I remember being popular in my class over two decades ago. The virtual pet project is a lot simpler in comparison, with just two features: when a pet is stroked, it smiles, and when it’s shaken, it frowns.
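For readers who think in text rather than blocks, the two reactions can be sketched roughly as below. This is only an illustrative model in plain TypeScript, not the MakeCode project itself: the names `PetEvent`, `Face` and `react` are made up for the sketch, and on a real micro:bit the two cases would be attached to the logo-press and shake events instead.

```typescript
// Hypothetical model of the starter pet: two inputs, two faces.
// These names are illustrative and are not part of the MakeCode API.
type PetEvent = "stroke" | "shake";
type Face = "smile" | "frown";

// Map each input event to the face the pet should show.
function react(event: PetEvent): Face {
    switch (event) {
        case "stroke":
            return "smile"; // stroking the pet makes it smile
        case "shake":
            return "frown"; // shaking the pet makes it frown
    }
}
```

With so little state involved, there is nothing to remember between events, which is exactly why the project leaves so much room to extend.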

The limited functionality left plenty of room for exploration for the five Primary 7s with experience. It also left plenty of time for me to make sure the others managed the basics: linking to the micro:bit, flashing code, etc. I did drop the words food and health into the conversation but didn’t go any further.

We ended up having three sessions with the micro:bits and I was delighted with the results. Between them the sevens recalled variables from last year and were off. We ended up with pets needing fed to avoid death, being sick if fed too much and getting annoyed if they were petted too much. The younger pupils managed the basics and extended them in simpler ways, animating chewing and drinking or reacting to different buttons.

One Primary 6 who had previous experience did just as well as the Primary 7s. His pet had these features:

- Sleep

- Be happy if stroked (press logo)

- Be sad if shaken

- Die (wait long enough and don’t feed it)

- Be sick if fed too much (can be cured using B)

- Be scared (by making a noise/blowing/filling the red bar 180+)

- Be fed (using A)

- Get a health check (A+B)

micro pets on the Banton Biggies

The first three were part of the class instructions, taken from the MakeCode site; the rest were pupil ideas.
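To give a flavour of how variables make these extra features possible, here is a rough sketch of the kind of logic involved. It is my own simplified model in plain TypeScript, not the pupil's code: the `Pet` class, its method names, and the `MAX_FOOD` and starting food values are all assumptions for illustration. Only the 180 sound threshold and the button roles come from the list above, and sleep is left out because the list does not say what triggers it.

```typescript
// Hypothetical model of the extended pet. Names and most numbers are
// assumptions for illustration; only the 180 threshold is from the post.
type Mood = "ok" | "happy" | "sad" | "scared" | "sick" | "dead";

const MAX_FOOD = 5; // assumed: feeding past this makes the pet sick
const LOUD = 180;   // from the post: sound level that scares the pet

class Pet {
    food = 3;       // assumed starting food level
    mood: Mood = "ok";

    // A dead or sick pet ignores strokes, shakes and noise.
    private canReact(): boolean {
        return this.mood !== "dead" && this.mood !== "sick";
    }

    stroke(): void { if (this.canReact()) this.mood = "happy"; }
    shake(): void { if (this.canReact()) this.mood = "sad"; }
    hear(level: number): void {
        if (this.canReact() && level >= LOUD) this.mood = "scared";
    }

    // Button A: feed the pet; too much food makes it sick.
    feed(): void {
        if (this.mood === "dead" || this.mood === "sick") return;
        this.food += 1;
        this.mood = this.food > MAX_FOOD ? "sick" : "happy";
    }

    // Button B: cure a sick pet.
    cure(): void {
        if (this.mood === "sick") {
            this.food = MAX_FOOD;
            this.mood = "ok";
        }
    }

    // Called at regular intervals: the pet gets hungrier and dies at zero.
    tick(): void {
        if (this.mood === "dead") return;
        this.food -= 1;
        if (this.food <= 0) this.mood = "dead";
    }

    // A+B: report the current state.
    healthCheck(): string {
        return `${this.mood}, food ${this.food}`;
    }
}
```

The whole pet comes down to two variables, one number and one mood, which is roughly the step the pupils took when they recalled variables from last year.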

Of course lots of mistakes were made along the way, but it was great to see solutions worked out, shared with neighbours and lights go on. Quite a few pupils used wee bits of free time to explore and test ideas completely independently.

On Friday we went to post on our e-portfolios about the work and embed the pets in the blogs. Editing the shortcode to do this is a bit tricky, and we also ran into a problem with the simulator not embedding properly: half the micro:bits were hidden. At first I thought this would be a problem with Glow Blogs, but later investigation showed it to be a problem with the MakeCode code. We worked around it by embedding the editor rather than the simulator. I do hope the MakeCode folk sort this out. Since it affects their documentation too, I expect they will.

I continue to be a micro:bit fan and will be using them throughout the year, hopefully incorporating them into our makerspace projects too.