#SilentSunday #birds

Warm and wet today. In the afternoon the sun came out, as did a few Red Admiral and Ringlet butterflies round Gartnavel.

Barassie: lots of small whites (on wild radish) & small tortoiseshells (on sea rocket) along the shore. The tortoiseshells were zooming along, some heading quite directly out over the beach. Watched a couple of common blues circling around, never settling. Hot day veering to muggy.

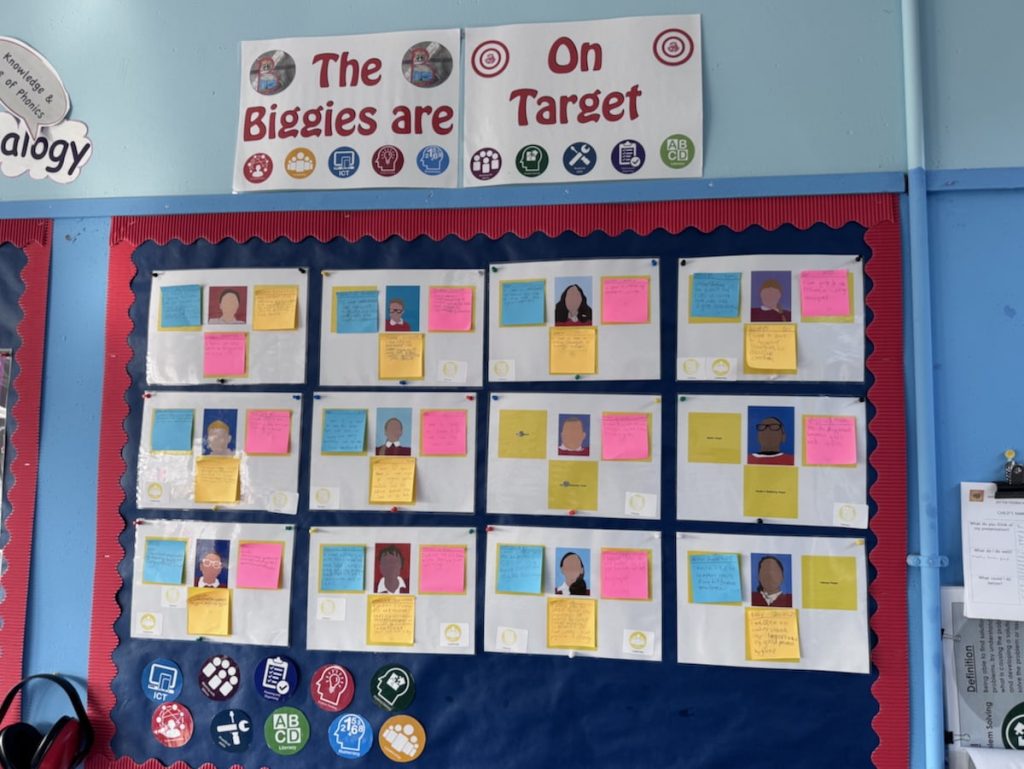

My blog got its name from an idea I tried to popularise: a Class Blog could be a wall display for everyone to see.

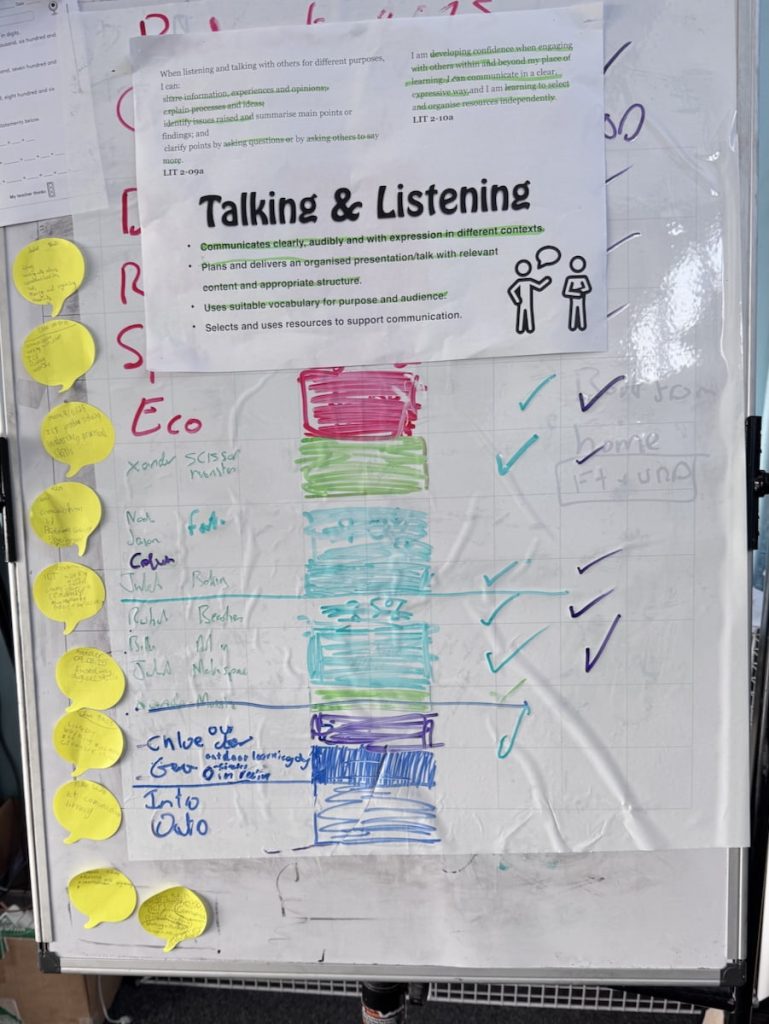

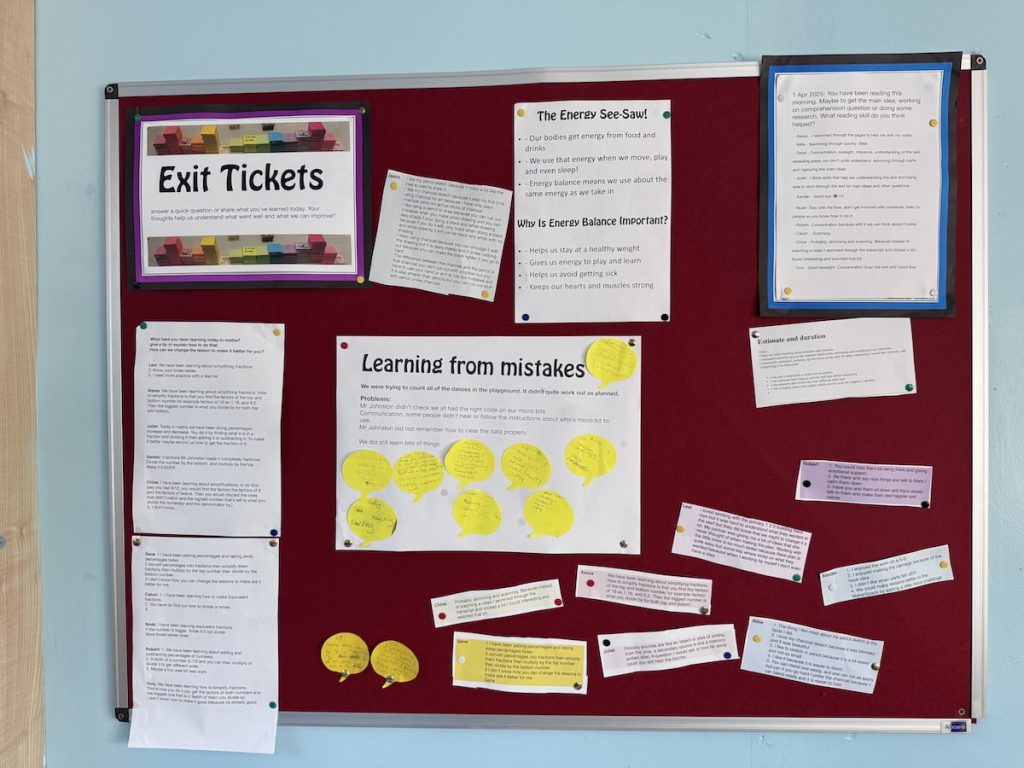

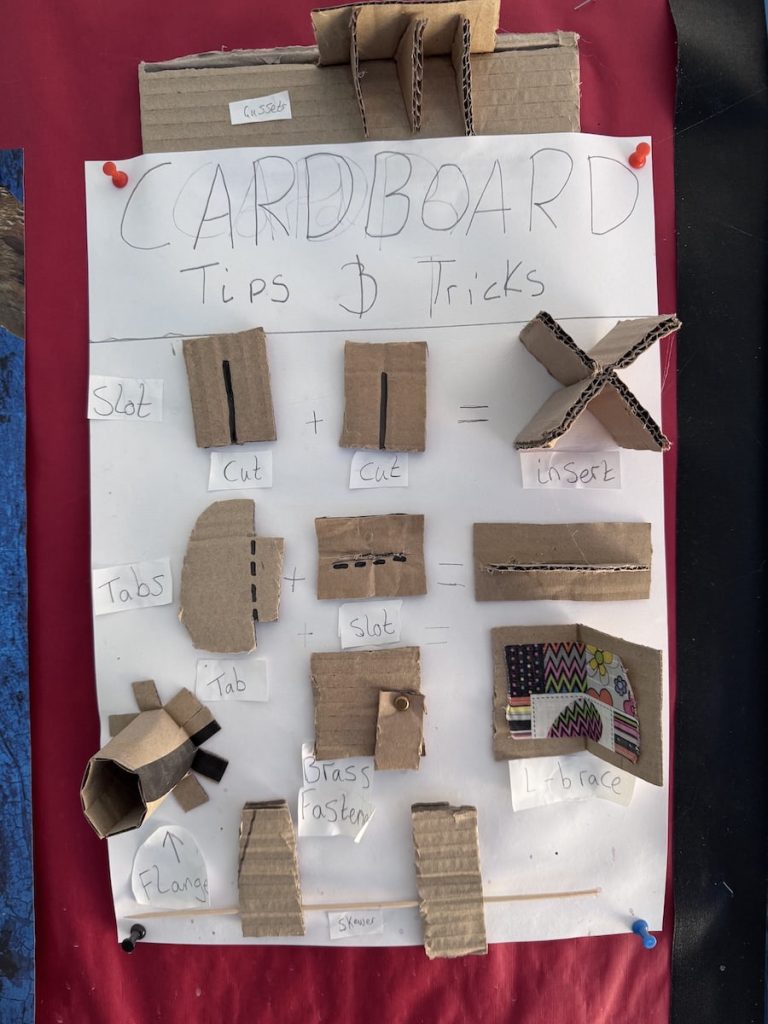

My own classroom displays tend towards the messy. As I tidied up for the last time 🎻, I took a few photos of today for my memory box.

I thought it might be worth noting this use of claude.ai. I’ve seen a wide variety of views on AI and its promise & pitfalls. When it comes to writing a wee bit of code I feel a lot of sympathy with Alan’s approach. But I have dabbled a bit, and did so again this week.

I use gifsicle a bit for creating and editing gifs; it is a really powerful tool. I think I’ve a reasonable but limited understanding of how to use it. In the past I’ve used it for removing every second frame of a gif and adjusting the delay:

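    #!/bin/bash
    # -U expands each frame fully so frames can be dropped; keep frames #0, #2, ... #20,
    # each with a delay of 28 hundredths of a second, cut to 64 colours, optimise (-O3).
    gifsicle -U -d 28 --colors 64 "$1" $(seq -f "#%g" 0 2 20) -O3 -o "$2"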

This is pretty crude: you need to manually edit the number of frames and guesstimate the new delay, which will be applied to every frame¹.
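Rather than hand-editing the frame range, the `seq` call could take its upper bound from the gif itself. This is a minimal sketch, assuming the first line of `gifsicle --info` output has the form `* name.gif 20 images` (worth checking against your own version); `frame_count` and `even_frames` are hypothetical helper names. It still leaves the delay as a guess, so it only fixes half of the problem.

```bash
#!/bin/bash
# Hypothetical helper: read `gifsicle --info` output on stdin and print the
# frame count, assuming the first line looks like "* name.gif 20 images".
frame_count() {
  awk 'NR == 1 { print $(NF-1); exit }'
}

# Hypothetical helper: print gifsicle frame selections for every second
# frame of an N-frame gif (#0 #2 #4 ...), one per line.
even_frames() {
  local n=$1
  seq -f "#%g" 0 2 $(( n - 1 ))
}

# Possible usage (the delay of 28 is still a guess, as in the original script):
#   n=$(gifsicle --info "$1" | frame_count)
#   gifsicle -U -d 28 --colors 64 "$1" $(even_frames "$n") -O3 -o "$2"
```

Taking the count from the file means the same script works for a 20-frame gif and a 57-frame one without editing.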

I know gifsicle can list the delays of each frame with the `--info` switch, but I do not know enough bash to use that information to create a new gif. I had a good idea of the pseudocode needed, but I reckoned that the time it would take to read the man page and google my way to the bash syntax was too much for me.
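For what it’s worth, reading the per-frame delays out of `--info` is mostly a text-processing job. This is a minimal sketch, assuming gifsicle reports each frame as a `+ image #N ...` line, optionally followed by a line containing `delay 0.10s` (delay in seconds); that format is from memory, so check it against your own output. A frame with no delay shown is treated as 0. `frame_delays` is a hypothetical name. It prints each delay in hundredths of a second, the unit gifsicle’s `-d` option uses.

```bash
#!/bin/bash
# Hypothetical helper: read `gifsicle --info` output on stdin and print each
# frame's delay in hundredths of a second, one per line.
# Assumed format (check your version): a "+ image #N ..." line per frame,
# optionally followed by a line containing "delay 0.10s".
frame_delays() {
  awk '
    $1 == "+" && $2 == "image" {   # start of a new frame
      if (n++) print d             # emit the previous frame delay
      d = 0                        # frames with no delay shown count as 0
    }
    {
      for (i = 1; i < NF; i++)
        if ($i == "delay") {
          v = $(i + 1)
          sub(/s$/, "", v)         # "0.10s" -> "0.10"
          d = int(v * 100 + 0.5)   # seconds -> rounded hundredths
        }
    }
    END { if (n) print d }         # emit the last frame delay
  '
}

# Possible usage:
#   gifsicle --info input.gif | frame_delays
```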

This week I was trying to reduce a gif I’d made from a screen recording; it turned out a bit bigger than I had hoped. I tried a couple of applications but didn’t make much of a dent, so I decided to ask Claude:

> I am using gifsicle. I want to input a gif and create a new one. Explode the gif, delete every second frame and put an animated gif back together, doubling the delay for each frame. So a gif with 20 frames will end up with 10 frames but take the same length of time. I’d like to deal with gifs that have different delays on different frames. So, for example, for frames 1 and 2 the delays for these frames are added together and applied to frame one of the new gif.

The original query had a few typos and spelling mistakes, but Claude didn’t mind. After one wrong move, when Claude expected the gifsicle file name to be slightly different, I got a working script and took my 957KB gif down to 352KB; that was the image at the top of the post.
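The delay rule in that request is easy to state on its own: new frame k gets the sum of the delays of original frames 2k and 2k+1 (counting from 0), and an unpaired last frame keeps its own delay, so the total running time is unchanged. This is a minimal sketch of just that arithmetic, reading one delay (in hundredths of a second) per line; `merge_pairs` is a hypothetical name, not part of gifsicle or of Claude’s script.

```bash
#!/bin/bash
# Hypothetical helper: read frame delays (hundredths of a second) on stdin,
# one per line, and print the delays for a gif with every second frame
# removed. Each kept frame gets its own delay plus the delay of the dropped
# frame after it; an unpaired last frame keeps its own delay.
merge_pairs() {
  awk '
    NR % 2 == 1 { d = $1; next }   # odd line: a frame we keep
    { print d + $1; d = "" }       # even line: fold the dropped frame in
    END { if (d != "") print d }   # leftover last frame, if any
  '
}

# Example: delays 10 10 20 30 5 (total 75) become 20 50 5 (still 75).
#   printf '%s\n' 10 10 20 30 5 | merge_pairs
```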

I had asked for the script to use gifsicle’s explode facility to export all of the frames, which it did, neatly, in a temporary folder. As I typed up this post, looking at my original attempt, I realised I should not have asked for the script to explode the gif, but just to grab every second frame from the original. This seemed more logical and perhaps more economical, so I asked Claude to take that approach. The final script has been quickly tested and uploaded as a gist: gif frame reduction, in case anyone finds it useful.
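The “grab every second frame from the original” idea can, in principle, be a single gifsicle call with no temporary folder. This is a minimal sketch, not the script in the gist: it assumes gifsicle applies a `-d` delay to the frame selections that follow it on the command line (my reading of how gifsicle options work; worth checking against the manual), and that the per-frame delays in hundredths of a second are already available one per line. `reduce_args` is a hypothetical name. It only prints the argument list, so you can inspect it before running anything.

```bash
#!/bin/bash
# Hypothetical helper: print, one per line, a gifsicle argument list that
# keeps frames #0, #2, #4 ... of INPUT and gives each kept frame the summed
# delay of itself and the dropped frame after it.
# Delays (hundredths of a second) are read from stdin, one per line.
# Assumption: a -d option applies to the frame selections that follow it.
reduce_args() {
  local input=$1 output=$2
  printf '%s\n' -U "$input"
  awk '
    NR % 2 == 1 { d = $1; f = NR - 1; next }
    { printf "-d\n%d\n#%d\n", d + $1, f; d = "" }
    END { if (d != "") printf "-d\n%d\n#%d\n", d, f }
  '
  printf '%s\n' -O3 -o "$output"
}

# Possible usage (avoids mapfile, so it also runs on the older bash on a Mac):
#   args=()
#   while IFS= read -r a; do args+=("$a"); done \
#     < <(printf '%s\n' 10 10 20 30 | reduce_args in.gif out.gif)
#   gifsicle "${args[@]}"
```

Building the arguments into an array, rather than one long string, keeps file names with spaces intact.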

Of course this has added to the pile of not-quite-formed reflections on AI and whether we should have anything to do with it. I don’t feel too guilty, as I needed at least a little gifsicle know-how to get started.

As I approach my retirement from class teaching, I thought it might be worth making some notes about how I use Glow Blogs¹ in that role². I’ve been using blogs in class since 2004. A lot of what follows is obvious stuff, but there may be a nugget or two.

My current class site is Banton Biggies – The Tallest Class in Banton Primary.

This is principally a way of keeping a record of some of the more interesting things we have been doing, and of informing parents and anyone else who is interested.

When I started running a class blog, back in 2004, I had pupils doing most of the posting: a rota of pupils posted about the previous day’s learning. I now focus on my pupils posting to their e-portfolios and some project work. I do all of the class blog posting myself, but I include some ‘pupil voice’ by lifting quotes from the class’s e-portfolios and exit tickets.

Surprisingly, I now find it harder to organise rotas and give individuals time for posting than I did before CfE.

I post a lot more video now than I used to, mostly via YouTube. These are often just concatenated photos that I take, short videos the pupils have created, or a combination of both. I don’t spend much time editing these, using Snapthread or iMovie’s Magic Movie.

I use the WordPress block editor for most things. I try to use featured images and a limited number of photos in galleries, along with some single images. I use the quote block quite often to add text from the pupils.

I use the Embed Plus for YouTube plugin to post YouTube videos. This allows me to avoid suggested videos at the end of play. I needed to get a YouTube API key, which was a bit of a faff, but it only had to be done once. The videos are unlisted in YouTube, as recommended by North Lanarkshire Council.

I seldom use any other blocks. To insert a block quickly, I type a slash (/) and start typing the block’s name.

I find the class blog very useful for gathering information about an activity or concept that the class has been involved in. I can use separate pages to gather posts on a particular topic or project. For example, I’ve posted a lot about our MakerSpace, so I have a page for that. There I used the Query Loop block to gather the relevant posts and the Embed Plus plugin to show a YouTube playlist.

Tags and categories allow me to quickly pull together evidence if asked for it. Search helps my failing memory when I want to repeat lessons from a few years back or remember ideas to share.

I know that blogs are a bit more difficult to post to than X/Twitter. Twitter became the go-to way to share classroom activity (maybe not so much now). I’ve found time & again that blogs are more powerful and useful. I can’t imagine teaching without one.

I post homework grids to my class blog as well as providing them physically.

My class makes use of individual e-Portfolios for keeping a record of some of their learning. At one point the council encouraged schools to use them with all upper primary pupils to produce profiles. I use them to track targets and record learning in a slightly less formal way. Since I teach multi-composites, some of my primary sevens have had quite impressive sites.

Hopefully some experience of using WordPress, the most popular app for creating websites, will do some good too.

This lists and classifies the creatures we have seen in the playground or on our outdoor learning trips to the woods. It has been running for just over a year and I have just made it public. The site is organised around the classification of animals, so we are learning about that, lifecycles and some WordPress skills.

This site is being made by the children in the Banton Biggies class of Banton Primary. They are using it to practise their research and writing skills. The information is gleaned from the internet and we try to acknowledge sources appropriately. We may make mistakes and will try and correct them over time. Always a work in progress.

My class occasionally creates an episode for the BBP; this has turned out to be about two episodes a year. In my previous school we tried for a podcast once a month, but that was a lunchtime club. Currently my whole class is involved. We use it as an opportunity to explore writing, collaboration and talking (more).

I’ve posted a lot about podcasting here, for example: More Classroom Podcasting & More Podcasting in the Classroom thoughts

I keep a resource site for my class: Banton Buzz – Challenges, Links and Tasks for the Banton biggies. At first I tried to organise it; now I mostly give the class links to posts and projects on the site. This means a lot of the content is not easily discoverable. I have used H5P hundreds of times on the site to create quizzes and activities. The vast majority of the content here goes along with the NLC spelling program for second level, but I’ve experimented with a lot of other H5P content types, e-portfolio starters, video embeds and experiments.

I’ve got a blog of this name where I post short exit-ticket-style questions and pupils post comments. I use this as an occasional alternative to Post-its and other plenary tools. I am not sure that I would recommend it to anyone else, but as I am pretty familiar with the system I can quickly post a question on the fly and pop a QR code up on our Apple TV or AirDrop a link.

It was over 20 years ago that I started using blogs in my primary classes. I feel I’ve only scratched the surface of what is possible. It is one thing I would certainly recommend to my fellow teachers as being worth the extra workload.

Usually at this time of year I am busy ‘tidying’ things into cupboards as the summer holidays approach. This year I am having to clean them out – nine years of accumulated stuff.

There are worksheets galore, laminated instructions and guides, old Tate gallery calendars, books, tapes, things I’ve made to help with a task, things I thought might be useful, kids’ work I couldn’t throw out, old bits of technology, and a tower of DVDs I was keeping for bird scarers.

The electric trunking round my room has a collection of interesting wee things the kids have found: spider skeletons, squirrel- and mouse-gnawed nuts, broken eggshells, feathers and the like.

As someone who doesn’t have a great memory, I find this brings back a lot. It also makes me think of a few slightly connected things.

Primary teachers create a lot of resources, or buy them from Twinkl or similar. I thought that the flowering of edutech might have delivered shared resources in a more distributed, open way than it has. I do resent the money spent, as I think that with some leadership we could have had something great.

I am organising, as best I can, anything I think might be useful and leaving it for the next person to occupy my classroom. But I have also found resources left by the previous incumbent that I’ve never touched.

Some of the memories I’ve got are stored in our class blog. As they get older, I doubt they are of much interest to anyone other than me. I will be a wee bit sad as they are lost or replaced in the future.

I met this snail this morning. I am wondering why its trail is ‘dotted’. Was it hopping? ;-)

Read Show Don’t Tell by Curtis Sittenfeld ★★★★☆📚

Midlife stories from mostly well educated, well off American (USA) women. Often looking back as well as moving forward. Despite being set in such a different world, I was both absorbed & entertained.

I might have enjoyed it even more if I had taken time between the stories.

It looked like it was going to rain this afternoon, so we had a quick walk up to Jaw Reservoir. Plenty of small heath butterflies about. Lots of foxgloves and plenty of bird song. A whinchat in a dead larch near the water.