Walk to greenside in the autumn rain yesterday. Low cloud & mostly very dull, made the few yellow leaves and other colours pop a bit.

Read: And He Shall Appear by Kate van der Borgh ★★★★ 📚

Working class boy is dazzled by Cambridge & his magician, occultist “friend”. Page turner, dark academia.

Some people say we’re our true selves when we think nobody is watching. But how do we know our own identities without others’ confirming gaze? If, like the tree falling in the proverbial wood, nobody is around to hear us, is our story a story at all? And when we’re different things to different people, what then?

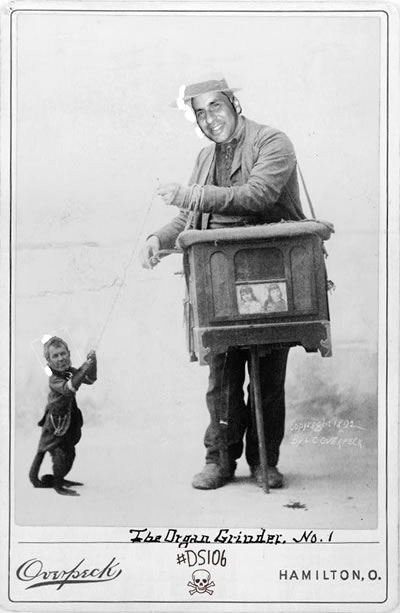

#TDC1968 see also dancing

see also personal.

If I’d the skills this would not be a terrible edit and it would be animated. I do dance to a lot of Alan’s tunes.

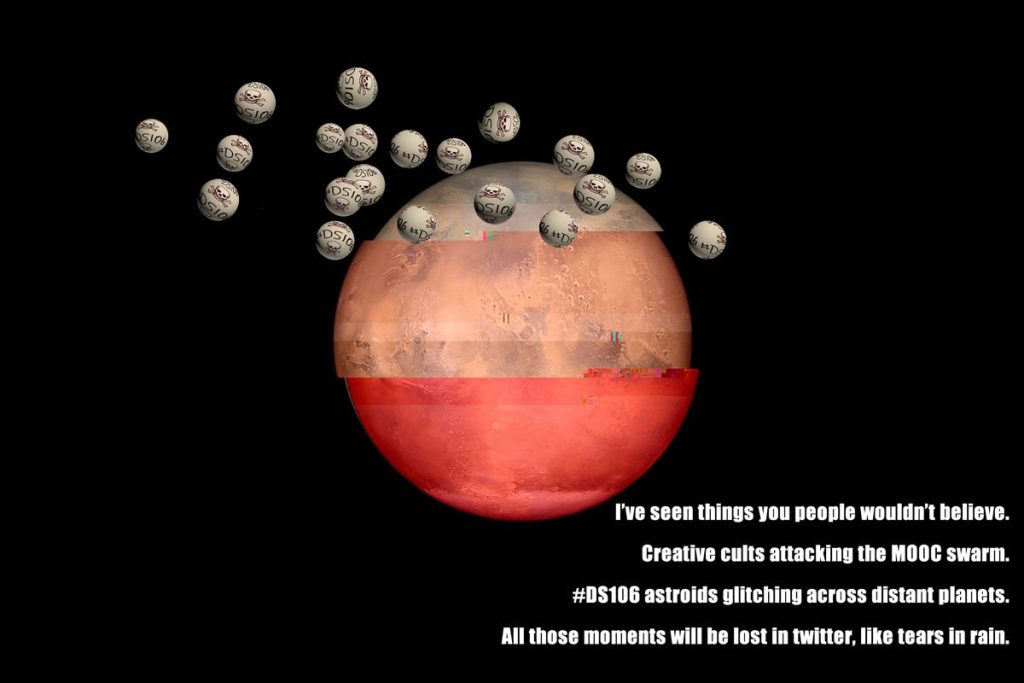

I’ve had a lot of fun, and learning, making Jim Groom dance. This is a reasonable example of how ds106 can lead you down strange paths of learning and community. Jim took this in good part.

#tdc1919 Celebrity Heads in a Jar | The DS106 Daily Create

Making fun of DS106 leaders can get quite personal, see also socks and dancing.

There is a DS106 Daily Create scoreboard. I was at the top once:

Make America Gif Again #tdc2392 #ds106 @jimgroom still dancing;-) See also, dancing, personal

One of the best parts of DS106 is what some call remixing & I think of as riffing. Many of these are lost in the depths of deleted Twitter feeds, but one I recall was record riffing, which ended up with this:

One of the most delightful things that can happen in DS106 is that someone can take what you make and change or improve it. One of my favourites is #tdc1713 Get Your Mozart On: Compose an Imaginary Musical Manuscript | The DS106 Daily Create:

This was much improved by Todd (lost on Twitter) and made really musical by Viv.

In another post about this sort of thing I wrote:

For me ds106 is a bit like non competitive tennis with self replicating balls that can be played on any court you like with any rules you like with the addition of be nice.

from: Boing – 106 drop in

Read: Americanah by Chimamanda Ngozi Adichie ★★★★ 📚

Love story that investigates class, race, sexism & history in Africa, USA & Britain

Obinze saw himself through Vincent’s eyes: a university staff child who grew up eating butter and now needed his help.

Or DS106 look back in bewilderment

My path to DS106 is lost in the haze. The first mention on this site is animating gifs on a rainy afternoon.

At that point I’d been reading around ds106 for a while.

I suspect my intro came from the flowering of web2.0 in education. I was certainly reading Alan in 2006 and remember reading about Jim’s involvement in The Peoples Republic of Non-Programistan.

My first round was most encouraged by this idea: #tdc2706 #ds106 “Welcome aboard, do what you like and leave the rest.” The Word according to @jimgroom

Once I started I’ve not really stopped. I’ve participated in several rounds of DS106 classes, finishing some, some not. My responses are scattered over services: Twitter, Flickr, YouTube, Mastodon & my blogs. I don’t respond much now, but I try to use my blog for anything I want to keep. DS106 has taught me about the impermanence of the web and how a domain of my own gives me a little stability.

I do not think there has been an online experience that has been as educational as DS106. It took me into podcasting, aggregation, WordPress and more. It gave me the most positive experience of online learning & connection.

Reclaim Hosting was set up to help people to take control of their online presence. Tell us, in your own voice, why creativity and openness are important to you. Use any tool you like – we like Vocaroo.

A quick GarageBand. Music: reggae dub piano by XHALE303 (Attribution-NonCommercial 4.0 International)

#SilentSunday

Find the TDC that has been least responded to or ‘Least popular’ (the opposite of https://daily.ds106.us/popular/) and add a response to it. Help it feel the #WildDS106 love!

A convoluted one. I downloaded the RSS feeds for the tags from the last 100 #dailycreates and counted the items in each. There were none for tdc4993, so I made this. I then looked back through the toots by @creating and lo, there were plenty of replies. It was fun working out how to download 100 feeds & count the items.

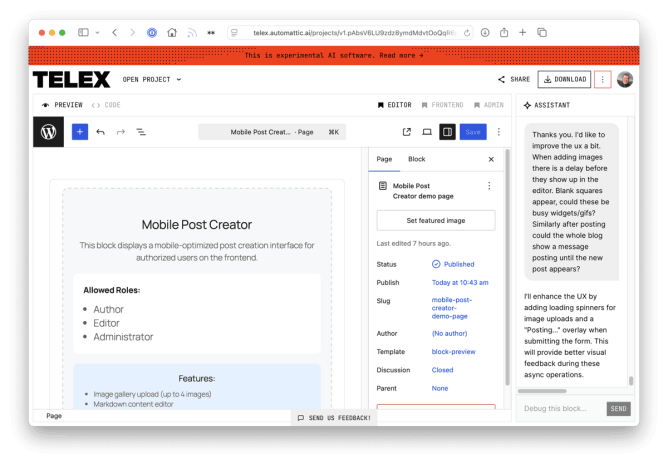
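The feed counting could be sketched roughly like this in Python. This is not the script used for the post, just one way to do it under some loud assumptions: each tag has its own RSS 2.0 feed, the feed address follows a pattern you supply yourself (the `url_template` below is a placeholder, not a real ds106 URL), and the helper names are made up for illustration.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Callable, Dict, Iterable, List, Union


def count_items(rss_xml: Union[str, bytes]) -> int:
    """Count the <item> elements inside the <channel> of an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    return len(root.findall("./channel/item"))


def fetch_feed(url: str, timeout: float = 30.0) -> bytes:
    """Download one feed and return the raw bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def count_responses(
    tags: Iterable[str],
    url_template: str,
    fetch: Callable[[str], Union[str, bytes]] = fetch_feed,
) -> Dict[str, int]:
    """Map each tag to the number of items found in its feed.

    url_template is an assumption: a string with a {tag} placeholder
    that expands to the feed address for that tag.
    """
    return {tag: count_items(fetch(url_template.format(tag=tag))) for tag in tags}


def least_responded(counts: Dict[str, int]) -> List[str]:
    """Return every tag that shares the lowest item count, sorted by name."""
    if not counts:
        return []
    lowest = min(counts.values())
    return sorted(tag for tag, n in counts.items() if n == lowest)
```

With a real feed pattern in hand, something like `least_responded(count_responses(tags, "https://example.invalid/tags/{tag}/feed"))` would list the quietest tags. As the post found, an empty feed does not prove nobody replied, so it is worth checking the replies by hand as well.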

I tried Telex out a couple of times. The other day I had an interesting idea.

In talking to teachers about Glow Blogs, one of the recurring themes is that Blogs are not as easy to use as Twitter¹. Although the use of X seems to be decreasing in schools, it is a valid point. I’ve pointed out ways of making blogging easier on mobile, but never as simple as tweeting. I certainly prefer blogging on the desktop myself.

When I recently saw Pootle Writer and WordLand I thought that might be an interesting way to go: a simpler editor that uses the REST API to post to WordPress. I also use micro.blog, which has a great mobile UI for posting from your phone in its app. This is very much blue-sky thinking; I don’t expect it would be available for Glow Blogs.

As none of these products fitted exactly with my way of blogging², I explored making my own external editor using a few AI tools. I got a couple working but never completely to my liking. Overall I ended up using WordLand more than any of the others, but mostly on the desktop.

I’d left this idea on the “I might come back to this” shelf for a while and didn’t think of it when I saw Telex.

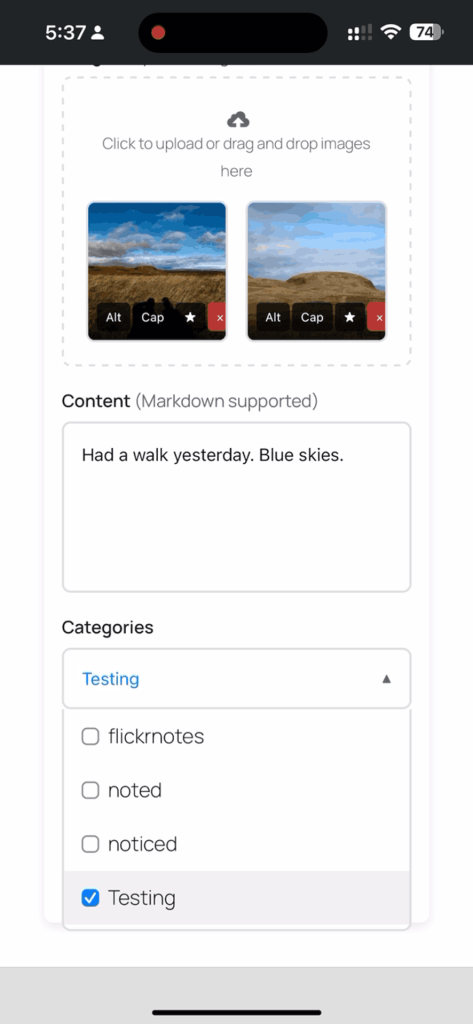

Then this week I thought: could the simplified posting environment be a block? This would remove the need for authentication, and posting would be direct rather than via the REST API.

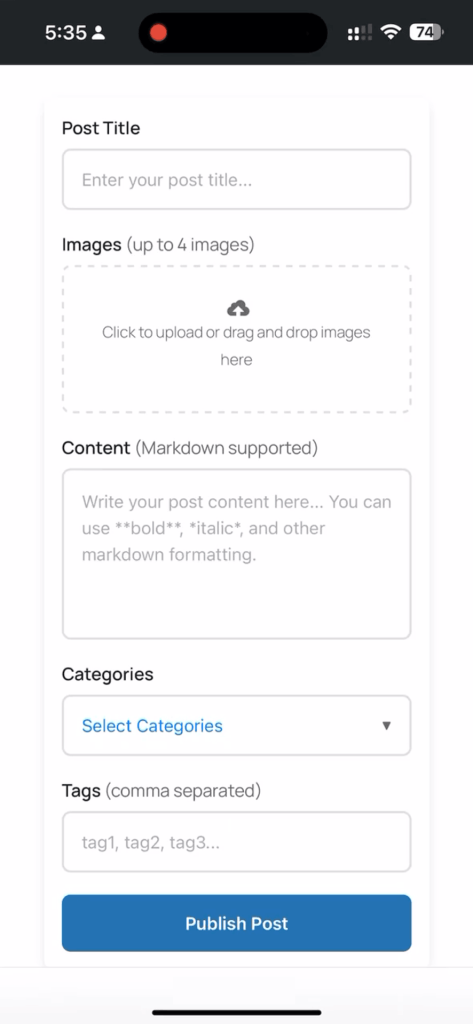

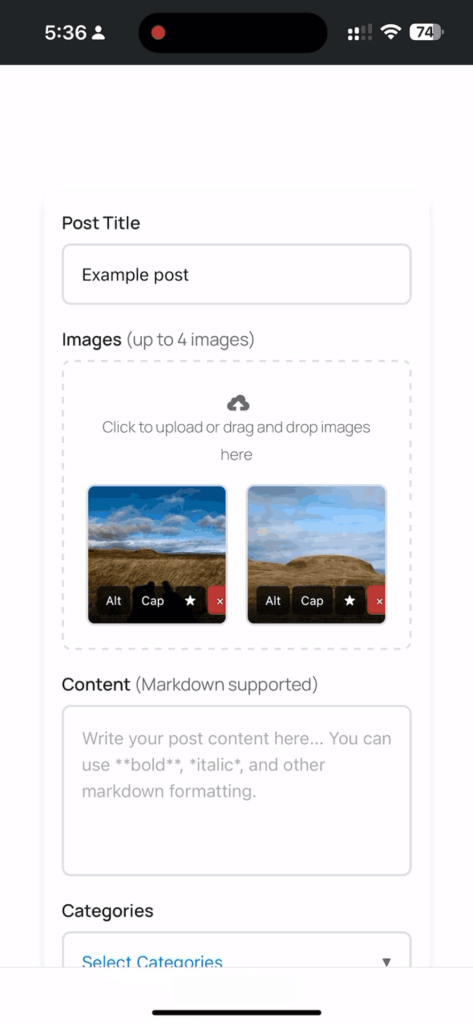

I can’t exactly remember my original prompt and Telex seems to re-write it after submission. Basically I asked for a block that would, for logged-in users, provide a simplified posting environment on the front end. Fields for title, body and up to 4 images. The images would be in a gallery. Selectors and inputs for tags and categories. Images would be resized to 1200 pixels max. Markdown could be used.

I think I had a conversation that went on over the next couple of days³.

The first iteration worked but produced a classic editor block. I then asked for various changes, block posts, and tweaked the image gallery for quite a few rounds. Eventually I supplied an example of the HTML I needed, copied from a post with a gallery the way I like them.

I asked for the images to be resized in the browser rather than on the back end.
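The 1200 pixel limit comes down to a small piece of arithmetic. A rough sketch in Python, purely for illustration: the block itself does this in the browser, and the code Telex generated is not shown here. It assumes the limit applies to the longer side of the image and that smaller images are left untouched; `fit_within` is a made-up name.

```python
from typing import Tuple


def fit_within(width: int, height: int, max_side: int = 1200) -> Tuple[int, int]:
    """Scale (width, height) down so the longer side is at most max_side.

    Assumptions: the limit applies to the longer side, the aspect ratio
    is kept, and images that already fit are never enlarged.
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    # Multiply before dividing so common sizes come out exact.
    new_width = max(1, round(width * max_side / longest))
    new_height = max(1, round(height * max_side / longest))
    return new_width, new_height


print(fit_within(4000, 3000))  # landscape photo from a phone
print(fit_within(800, 600))    # already small enough
```

Doing the resize before upload means the phone sends a much smaller file, which is presumably why it is worth the short wait while the image is processed.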

Then I installed the plugin on a test server and tried it on a phone. This led to tweaking the interface a bit more. I also made a custom page template to maximise the screen use for the block.

I then ran the Plugin Check plugin, which found a few errors. This led to a lot of repetition: as one error was solved, another popped up. These were mostly to do with sanitization.

Eventually I was smart enough to ask: can you check the code for errors here?

Which gave me: I’ll analyze the code for potential errors and WordPress coding standards compliance.

I had to ask for results: How did that go?

Telex found some issues and listed them. I then asked: As I do not understand the code what would you recommend?

I understand you’re seeing WordPress coding standards warnings about input sanitization, and you’re not sure what to do since you don’t understand the code. Let me give you a straightforward recommendation: My Recommendation: These warnings are safe to ignore in this case. Here’s why:

This was followed by a detailed list of reasons, including:

… wp_unslash(), sanitize_text_field(), absint()) to clean the data before using it.

… $_POST['images_data'] and flags it, even though we’re sanitizing it properly with a dedicated function.

Which seems good enough for me. It also chimed with the reports from the plugin checker.

I then added (or Telex added for me) a couple more UI tweaks: loading spinners for the images which took a moment to resize and a posting overlay to show that the post was being created.

Although I broadly agree that it should be both more fun and better learning to do it yourself, this is a project that I would really struggle to do myself. I’ve occasionally made very simple plugins, mostly shortcodes. One more complex one took me an age and had a steep learning curve. It is now broken. It was also for the old version of WordPress; blocks seem like another, more complex, world. Telex made a simpler block version for me quite quickly.

Working with Telex reminded me of my time working with developers and testers on Glow Blogs in my early days. Going back and forwards with requirements, tests and refinements. It was quite enjoyable.

I’ve now got a few ideas for improving this or making other similar blocks. For example, I make posts for books I’ve read. They are short and have a very predictable structure. A simplified posting environment for my phone could be useful.

I also think that adding “analyze the code for potential errors and WordPress coding standards compliance” to prompts might save a bit of time.

If you are interested here is the project in Telex.

Repeat a challenge from the original inspiration for the TDC (this site can take quite a while to load)

@creating@daily.ds106.us #tdc5039 #ds106 recycling #ds579 Make a photograph that features a shadow as your subject today.

Had a walk yesterday with my daughter. The skies cleared for a couple of hours.