So yesterday evening I was waiting for my wife to join me to watch TV. Earlier in the day I'd looked at the Daily Create, which was a challenge to use ICM. I read the suggested information on intentional camera movement and realised I had none of the recommended equipment. A quick search found several articles about using the Slow Shutter app on an iPhone. Not only did I have my phone in my hand, I already had the app.

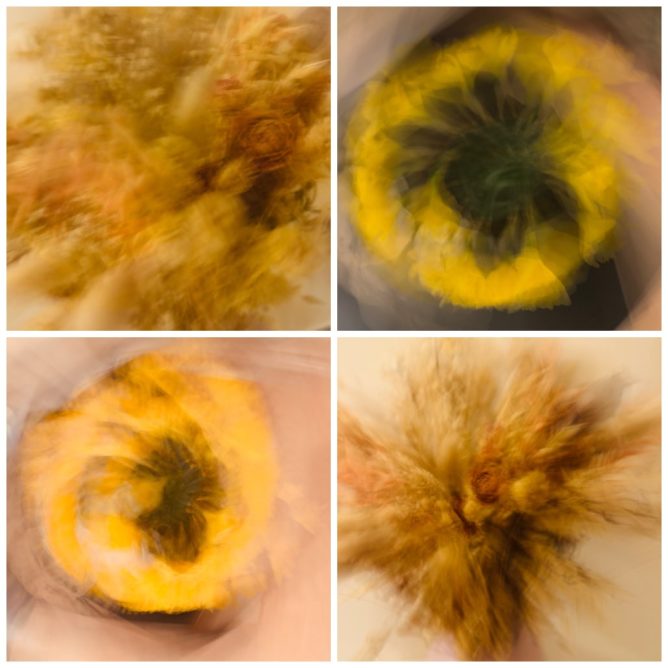

As it was dark outside and I only had a few minutes, I played around with a vase of daffodils and a bunch of dried flowers in the living room. I can't say I was completely enamoured with the results, but I could see it might be fun to play around some more.

I thought I'd share one of the photos on Mastodon in response to the Daily Create. Looking at the pictures I'd taken, I thought a grid of four might be nice. I could have just uploaded four photos to a toot, but decided to run a shortcut to combine the images. It then turned out the shortcut was broken. I had a quick look and it seemed okay, but it was old; I'd not used it for a few years. Not being one for shortcuts, I didn't think I could fix it in the couple of minutes before my wife arrived and we started watching.

If I'd been on my Mac, I'd have had many ways of doing this in less than a minute, but I was on my phone. I briefly thought of combining the images in Keynote and exporting them, but that would be a faff on the phone.

I then thought Claude AI could probably make an artefact that I could use. Of course, I could have just asked Claude to make me a combined image, but for some reason that didn't cross my mind. I guess there are a myriad of websites that would do the same, too.

> Can you make a one-page website that will allow a user to select images and turn them into a grid to download

After that, I had to report twice that the download didn't work on my phone before the webpage worked. I used the artefact to make my wee grid and popped it onto Mastodon.

It took me a couple more minutes to copy the code and add it to my Raspberry Pi, without leaving my chair. The Termius app let me connect to the Pi, create a new file, and paste in the code. The result is Image Grid Creator.

I guess in a few more minutes of internet time, this sort of ridiculous workflow will be simplified and everywhere.

Like everyone else, I’ve been reading a lot for and against AI. I think it is very hard for most of us to know where this is going. I’ve not really dived in, but I’ve not ignored it. I’ve not paid for it either.

I've used AI to help think through options for buying a car, made a few web pages, and consulted it on shell commands and regex. I've enjoyed using it most when I've made something myself but used it to discuss approaches, ask for code snippets, or check the syntax of my own failed snippets.

There is a lot of satisfaction in getting markup or simple JavaScript to work. It is not work for me but a wee hobby. Handing everything over to AI would be much less fun and far less satisfying.

I've also enjoyed using telex.ai to make simple WordPress blocks. Building these myself would be beyond my skills, but I can act as the product owner, and I know enough to stop telex running in circles.

Where this leaves us, I've no idea. Watching politics live on the BBC this lunchtime, I was not impressed with any of the politicians' responses to questions about getting AI companies to pay for the creative content they have scraped. I can't say I've a better idea or understanding. These are certainly interesting, as well as ridiculous, times!